- cross-posted to:

- pcgaming@lemmy.ca

Gee, we’ve had over half a century of computer graphics at this point. However, suddenly, when a technology arises that requires an obscene number of GPUs to generate results, a GPU manufacturer is here to tell us that all computer graphics without that new technology is dead for… reasons. I cannot see any connection between these points.

What do you mean “suddenly”? I was running path tracers back in 1994. It’s just that they took minutes to hours to generate a 480p image.

The argument is that we’ve gotten to the point where new rendering features rely on a lot more path tracing and light simulation that used to not be feasible in real time. Pair that with the fact that displays have gone from 1080p60 vsync to 4K at arbitrarily high framerates and… yeah, I don’t think you realize how much additional processing power we’re requesting.

But the good news is if you were happy with 1080p60 you can absolutely render modern games like that on a modern GPU without needing any upscaling.
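To put a rough number on that jump, here is a minimal back-of-the-envelope sketch. It only counts pixels per second and assumes 4K at 120fps as the example target (the function name is made up for illustration); it ignores per-pixel shading cost, which path tracing raises further.

```python
# Pixels the GPU has to produce per second at a given resolution and frame rate.
# Rough proxy only: it says nothing about how expensive each pixel is to shade.
def pixels_per_second(width: int, height: int, fps: int) -> int:
    return width * height * fps

baseline = pixels_per_second(1920, 1080, 60)   # 1080p60
target = pixels_per_second(3840, 2160, 120)    # 4K at 120fps (assumed example target)

print(baseline)           # 124416000
print(target)             # 995328000
print(target / baseline)  # 8.0
```

So even before any new lighting features, 4K120 asks for eight times the pixel throughput of 1080p60.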

I think you just need to look at the PS5 Pro as proof that more GPU power doesn’t translate linearly to better picture quality.

The PS5 Pro has a 67% beefier GPU than the standard PS5 - with a price to match - yet can anyone say the end result is 67% better? Is it even 10% better?

We’ve been hitting diminishing returns on raw rasterising for years now, a different approach is definitely needed.

Yeah, although I am always reluctant to quantify visual quality like that. What is “67% better” in terms of a game playing smoothly or looking good?

The PS5 Pro reveal was a disaster, partially because if you’re trying to demonstrate how much nicer a higher resolution, higher framerate experience is, a heavily compressed, low bitrate YouTube video that most people are going to watch at 1080p or lower is not going to do it. I have no doubt that you can tell how much smoother or less aliased an image is on the Pro. But that doesn’t mean the returns scale linearly, you’re right about that. I can tell a 4K picture from a 1080p one, but I can REALLY tell a 480p image from a 1080p one. And it’s one thing to add soft shadows to a picture and another to add textures to a flat polygon.

If anything, gaming as a hobby has been a tech thing for so long that we’re not ready for it to shift to being limited by money and artistic quality rather than processing power. Arguably this entire conversation is pointless in that the best looking game of 2024 is Thank Goodness You’re Here, and it’s not even close.

Yeah, there’s a reason any movie attempting 3D CG with any budget at all has used path tracing for years. It’s objectively massively higher quality.

You don’t need upscaling or denoising (the “AI” they’re talking about) to do raster stuff, but realistic lighting does a hugely better job, regardless of the art style you’re talking about. It’s not just photorealism, either. Look at all Disney’s animated stuff. Stuff like Moana and Elemental aren’t photorealistic and aren’t trying to be, but they’re still massively enhanced visually by improving the realism of the behavior of light, because that’s what our eyes understand. It takes a lot of math to handle all those volumetric shots through water and glass in a way that looks good.

Yep. The thing is, even if you’re on high end hardware doing offline CGI you’re using these techniques for denoising. If you’re doing academic research you’re probably upscaling with machine learning.

People get stuck on the “AI” nonsense, but ultimately you need upscaling and denoising of some sort to render a certain tier of visuals. You want the highest quality version of that you can fit in your budgeted frame time. If that is using machine learning, great. If it isn’t, great as well. It’s all tensor math anyways, it’s about using your GPU compute in the most efficient way you can.

Devil’s advocate: splatting, DLSS, and neural codecs, to name a few things that will change the way we make games.

DLSS doesn’t work that well. I’m not looking forward to AI replacing artists’ work.

I’m not sure I agree with you on the former, DLSS is pretty remarkable in its current iteration

Agreed, things like DLSS are the right kind of application of AI to games, same with frame generation. The wrong kind is trying to figure out how to replace developers, artists of every kind, actors, etc in the production process with AI. That being said though, companies like Nvidia absolutely can and will profit off making sure that a game cannot run well on anything but the latest hardware that they sell, so the whole “you need to buy our stuff to play games because it has the good ai and now all games require the good ai” is capitalist bullshit

Ray tracing actually will directly change the way games are made. A lot of time is spent by artists placing light sources and baking light maps to realistically light scenery - with ray tracing, you get that realism “for free”.

DF did a really interesting video on the purely path traced version of Metro: Exodus and as part of that, the artists talked about how much easier and faster it was to build that version.

I think what he means is that AI is needed to keep making substantial improvements in graphic quality, and he phrased it badly. Your interpretation kind of presumes he’s not only lying, but that he thinks we’re all idiots. Given that he’s not running for office as a Republican, I think that’s a very flawed assumption.

And I don’t get why they would use it for graphics instead of using an AI coprocessor to do interesting stuff in games, like generating dialogue, complex missions, smarter NPCs, maps, etc.

You could build worlds where stuff happens that isn’t just governed by randomly doing stuff based on triggers.

Oh, now you’re wrong. AI upscaling is demonstrably more accurate than plain old TAA, which is what we used to use in the previous generation. I am NOT offloading compelling NPC dialogue to a crappy chatbot. Every demo I’ve seen for that application has been absolutely terrible.

Because as complex and hard to decipher as it is, all AI does is inference. Doing inference with graphics is something that has been perfected through decades and is worked on heavily.

Quests and dialogues rely more on the creative thinking of writers. Writing a satisfying side quest is quite hard, and leaving that task to a text generation engine is a huge pitfall. It has its use in generating some bulk text that can then be proofread, but generating text live? Hell no. Plus, what about voice acting? Will you also steal actors’ voices so that the garbage text generated is said by “not Scarlett Johansson” v3? If you think AI will generate smarter behaviours, your definition of smart and mine really differ. Will AI be able to rig animations and infer correct rig positions so that the NPC’s features line up with what they are saying? Mocap and voice acting are done heavily in big productions to get that.

Sounds like a bad thing tbh.

RIP the future of high end computer graphics. 1972 to 2024. You had a good run.

This feels like it’s establishing a precedent for widespread adoption/implementation of AI into consumer devices. Manufactured consent.

“We compute one pixel… we hallucinate, if you will, the other 32.”
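Taking that quote at face value, the share of pixels actually rendered works out as follows. This is just the arithmetic on the quoted figures, not a claim about how any particular pipeline splits the work.

```python
from fractions import Fraction

# Figures taken straight from the quote: 1 pixel computed, 32 inferred.
computed = 1
inferred = 32

share = Fraction(computed, computed + inferred)
print(share)                         # 1/33
print(round(float(share) * 100, 2))  # 3.03 (percent of pixels actually computed)
```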

Between this and things like Sora, we are doomed to drown in illusions of our own creation.

If the visuals are performant and consistent, why do we care? I have always been baffled by the obsession with “real pixels” in some benchmarks and user commentary.

AI upscales are so immediately obvious and look like shit. Frame “generation” too. Not sour grapes, my card supports FSR and fluid motion frames, I just hate them and they are turned off.

DLSS and FSR are not comparable.

“FSR looks like shit” is not the same thing as “upscaling looks like shit”.

That’s fine, but definitely not a widespread stance. Like somebody pointed out above, most players are willing to lose some visual clarity for the sake of performance.

Look, I don’t like the look of post-process AA at all. FXAA just seemed like a blur filter to me. But there was a whole generation of games out there where it was that or somehow finding enough performance to supersample a game and then endure the spotty compatibility of having to mess with custom unsupported resolutions and whatnot. It could definitely be done, particularly in older games, but for a mass market use case people would turn on SMAA or FXAA and be happy they didn’t have to deal with endless jaggies on their mid-tier hardware.

This is the same thing, it’s a remarkably small visual hit for a lot more performance, and particularly on higher resolution displays a lot of people are going to find it makes a lot of sense. Getting hung up on analyzing just “raw” performance as opposed to weighing the final results independently of the method used to get there makes no sense. Well, it makes no sense industry-wide, if you happen to prefer other ways to claw back that performance you’re more than welcome to deal with bilinear upscaling, lower in-game settings or whatever you think your sweet spot is, at least on PC.

That’s because FXAA also sucks. MSAA and downsampling are far superior. Also, AI-generated “frames” aren’t performant, it’s fake performance, because as previously mentioned they look like shit, particularly in the way that they make me think about how well I’m running the game instead of playing the game in front of me.

MSAA is pretty solid, but it has its own quirks and it’s super heavy for how well it works. There’s a reason we moved on from it and towards TAA eventually. And DLSS is, honestly, just very good TAA, Nvidia marketing aside.

I am very confused about the concept of “fake performance”. If the animation looks smooth to you then it’s smooth. None of it exists in real life. Like every newfangled visual tech, it’s super in-your-face until you get used to it. Frankly, I’ve stopped thinking about it on the games where I do use it, and I use it whenever it’s available. If you want to argue about increased latency we can talk about it, but I personally don’t notice it much in most games as long as it’s relatively consistent.

I do understand the feeling of having to worry about performance and being hyper-aware of it being annoying, but as we’ve litigated up and down this thread, that ship sailed for PC gaming. If you don’t want to have to worry, the real answer is getting a console, I’m afraid.

HEYY!!!

thanks for the real discussion and not memeing. i appreciate you. lemmy appreciates you.

my card supports FSR

Yeah this is “we have DLSS at home”. As someone who tested both, DLSS is the actually good one, FSR is a joke of an imitation that’s just slightly fancier TAA. Try DLSS Quality at 1440p or DLSS Balanced at 4K and you’ll see it’s game-changing.
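For a sense of what those presets mean in rendered pixels, here is a rough sketch. The per-axis scale factors are the commonly cited ones and are treated as assumptions here; individual games and DLSS versions can use different values, and the function name is made up for illustration.

```python
# Per-axis render scale for each preset. ASSUMPTION: commonly cited values,
# not read from any SDK; individual games can override them.
RENDER_SCALE = {"quality": 2 / 3, "balanced": 0.58, "performance": 0.5}

def internal_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Approximate resolution the game renders at before upscaling to width x height."""
    scale = RENDER_SCALE[mode]
    return round(width * scale), round(height * scale)

print(internal_resolution(2560, 1440, "quality"))   # (1707, 960)
print(internal_resolution(3840, 2160, "balanced"))  # (2227, 1253)
```

Under those assumptions, both suggested settings still render at or above roughly 1080p-class resolution internally, which may be part of why they hold up well.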

Ahh, so you don’t really know what you’re talking about.

The brute forcing of AI into anything goes into the next stage.

Good thing I haven’t cared that much about graphics since I was a teenager.

the premise seems flawed, i think.

i feel what he’s saying is: we suck at optimizing gfx performance now because gamers deem ai upscale quality passable

this feels opposite to what the ps poll says: that gamers enable performance mode more because the priority is stable frames rather than shiny anti-aliasing/post-processing.

I don’t see how that’s the case. Most people prefer more fps over image quality, so minor artifacting from DLSS is preferable to the game running much slower with cleaner image quality. That is consistent with the PS data (which wasn’t a poll, to my understanding).

I also dispute the other assumption, that “we suck at optimizing performance”. The difference between now and the days of the 1080Ti when you could just max out games and call it a day, is that we’re targeting 4K at 120fps and up, as opposed to every game maxing out at 1080p60. There is no target for performance on PC anymore, every game can be cranked higher. We are still using CounterStrike for performance benchmarks, running at 400-1000fps. There will never be a set performance target again.

If anything, optimization now is sublime. It’s insane that you can run most AAA games on both a Steam Deck and a 4090 out of the same set of drivers and executables. That is unheard of. Back in the day the types of games you could run on both a laptop and a gaming PC looked like WoW instead of Crysis. We’ve gotten so much better at scalability.

Most people prefer more fps over image quality, so minor artifacting from DLSS is preferable to the game running much slower with cleaner image quality.

I don’t think we differ much on this part. AI upscaling is passable enough that gamers will choose it. If presented with a better, non-artifacting option, gamers will choose that, since the goal is performance and not AI. If the stat is from PS data, and not from a poll, I think it just strengthens the point that users want performance more.

There will never be a set performance target again.

It’s not that there’s no set performance target. The difference is merely one target, in the Counter-Strike era, vs. many now. There are more performance targets for PC now than in the Counter-Strike days, and games just can’t keep up. Saying “there will never be a set performance target” is just hand-washing when publishers and directors won’t set a direction and decide which performance point to prioritize.

It might be that your point is optimizing for scalability, and that is fine too.

Yeah, optimizing for scalability is the only sane choice from the dev side when you’re juggling hardware ranging from the Switch and the Steam Deck to the bananas nonsense insanity that is the 4090. And like I said earlier, often you don’t even get different binaries or drivers for those, the same game has to support all of it at once.

It’s true that there are still some set targets along the way. The PS5 is one, the Switch is one if you support it, the Steam Deck is there if you’re aiming to support low power gaming. But that’s beside the point, the PS5 alone requires two to three setups to be designed, implemented and tested. PC compatibility testing is a nightmare at the best of times, and with a host of display refresh rates, arbitrary resolutions and all sorts of integrated and dedicated GPUs from three different vendors expected to get support it’s outright impossible to do granularly. The idea that PC games have become less supported or supportive of scalability is absurd. I remember the days when a game would support one GPU. As in, the one. If you had any other one it was software rendering at best. Sometimes you had to buy a separate box for each supported card.

We got used to the good stuff during the 900 series and 1000 series from Nvidia basically running console games maxed out at 1080p60, but that was a very brief slice of time, it’s gone and it’s not coming back.

Chasing graphics has led in directions nobody could predict and I’m glad I don’t play these games.

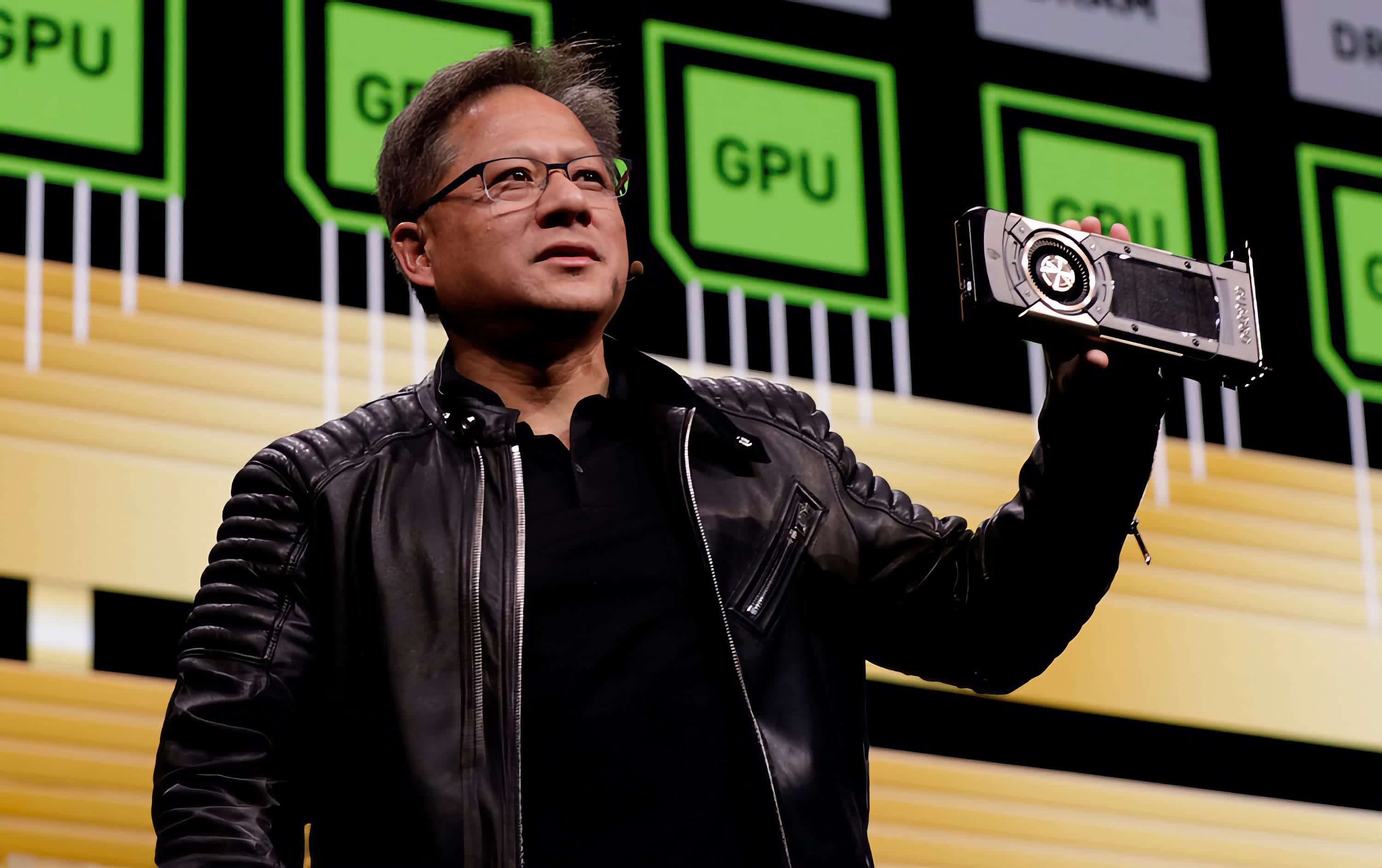

What’s up with the biker jacket?

Techbros

It’s a stupid gimmick like Steve Jobs’ turtleneck.

His version of a weighted blankie.

Oh here he is again, talking AI AI AI… Their stock value is already so high it will take over 50 years for its value to match its current price.

I’ve been playing old games on private servers. It’s been nice.

Star Wars Galaxies or PSO?

Star Wars Galaxies private servers are not a good experience. They don’t allow two players from the same IP, so if you live with someone you want to play with you have to tell them, then send them pictures of BOTH copies of the discs and you and the other person’s hands. They hide behind “preventing gold farmers” but like, who is actually going to be a gold farmer in a private server, and who actually cares if they did? The other 25 players in the server?

Nah, I’m good.

City of Heroes has been golden by comparison.

Oh wow, I haven’t played that in forever… Is the community up and running? What’s the update at, are we talking early days, Fire/Fire Defender or different builds altogether? Not even sure where my discs are in the garage. 🤔

This is the webpage for the project. It will have everything you want to know, and if you can’t find it there or the forums, there is also a wiki.

Awesome, thanks. I never would’ve expected it was so simple. 😅

City of Heroes has been golden by comparison.

-homecoming gang represent.

Tell me of these PSO private servers you speak of.

Can’t say there is one, at least not yet. There’s talk of one for PSO2.

Unfortunately PSU, as all my friends veto’d me for PSO first. But PSOBB is next, and hopefully by then Team Clementine will have released their PSO2 Original.

You’re lucky to have friends into the same stuff, I couldn’t pay my friends to play an MMO. I got one girlfriend too, but she didn’t like how much I played. I never got to play BB, what’s it like? And PSO2 was my favorite, the memories… A chance to get back into that, dare I say, it almost felt perfect somehow.

Keep an eye on Team Clementine, they’ll launch it eventually. Blue Burst is just PSO with extra content at the end game. Pretty fun. There are some private servers that show up as the first hit in a web search.

Oh sweet, guess I’ll dig around and look at the BB servers. Any recommendations?

Unfortunately not, I have yet to truly delve into that myself and I plan on self hosting a BB server.

We can’t do the profitable thing without the more expensive and even more lucrative thing, please, think of our monopoly.

Yes you can. Easily. You’re sitting on the infrastructure to do it.

Pre-built.

I never run the upscaling; it looks and feels so bad.

We’re at that point in Brazil where everything behind the screens is devastated and wrecked for profit, and now if you want to see any sign of nature you need a graphics card.

But that’s in California, where anything natural is on fire or is sliding across Malibu into the ocean.

So just a preview unless these people are stopped.

Computer graphics has existed for quite a long time. It seemed possible to do just last year.