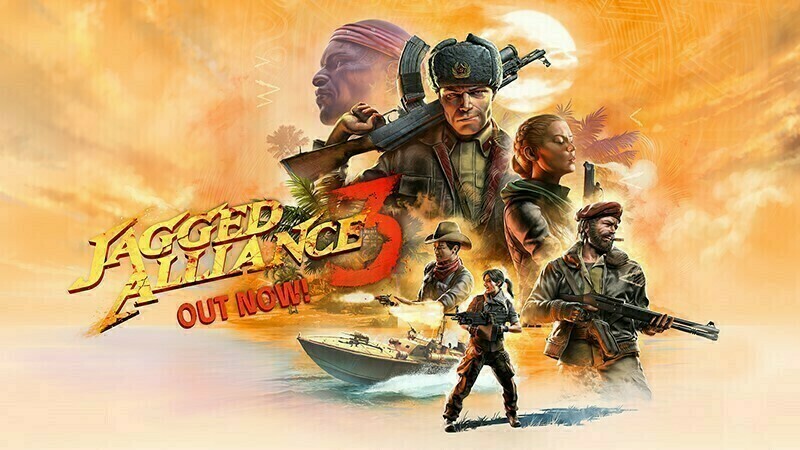

The game has already consumed over 40 hours of my time, and I’ve got plenty more campaign to go. It does just about everything I wanted JA2 to do to make it play faster: combat is faster, looting is faster, inventory is faster. It has a few things that look like X-COM, but it still mostly plays like JA. The early game is the roughest part, but things definitely shaped up once I had a team with size, experience, and gear.

And the campaign is detailed, with a few surprises and plenty of side quests. It does some things to pull the rug out from under you, which is rude, but rewarding if you play along and accept a few losses (or carefully savescum and go out of your way to avoid triggering timed quests).

Some of my own thoughts, which rebut the article in parts:

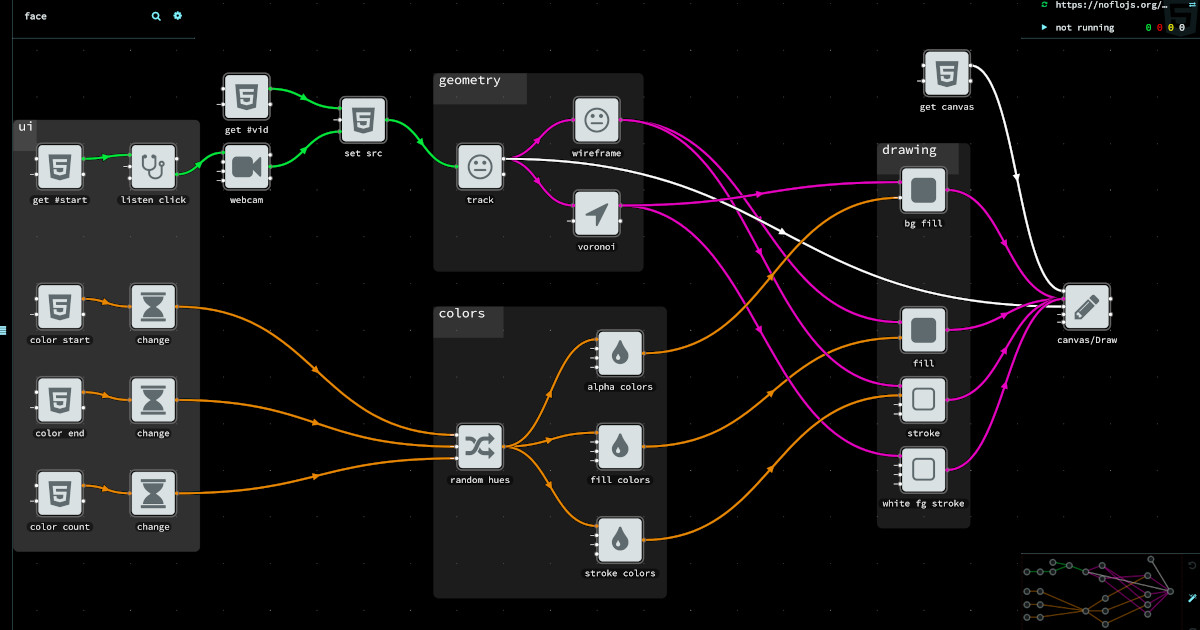

The author’s bio says that they have been doing this professionally for about 5 years, which, taken at face value, means that they haven’t seen the kinds of transitions that have taken place in the past or how widely game scope can vary. The way Godot does things has some wisdom of age in it. Even in its years as a proprietary engine (which you can learn something about from Juan’s MobyGames credits), the games it was shipping were aiming for the bottom of the market in scope and hardware spec: a PSP game, a Wii game, an Android game. The luxury of small scope is that you never end up in a place where optimization is some broad problem that needs to be solved globally; it’s always one specific thing that needs to be fast. Optimizing for something bigger needs production scenes to provide profiling data. It’s not something you want to approach by saying “I know what the best practice is” and immediately architecting around a shot in the dark. Working with an engine that just does the simple thing every time means it’s easy to make the changes you need to ship.