I must confess to getting a little sick of seeing the endless stream of articles about this (along with the season finale of Succession and the debt ceiling), but what do you folks think? Is this something we should all be worrying about, or is it overblown?

EDIT: have a look at this: https://beehaw.org/post/422907

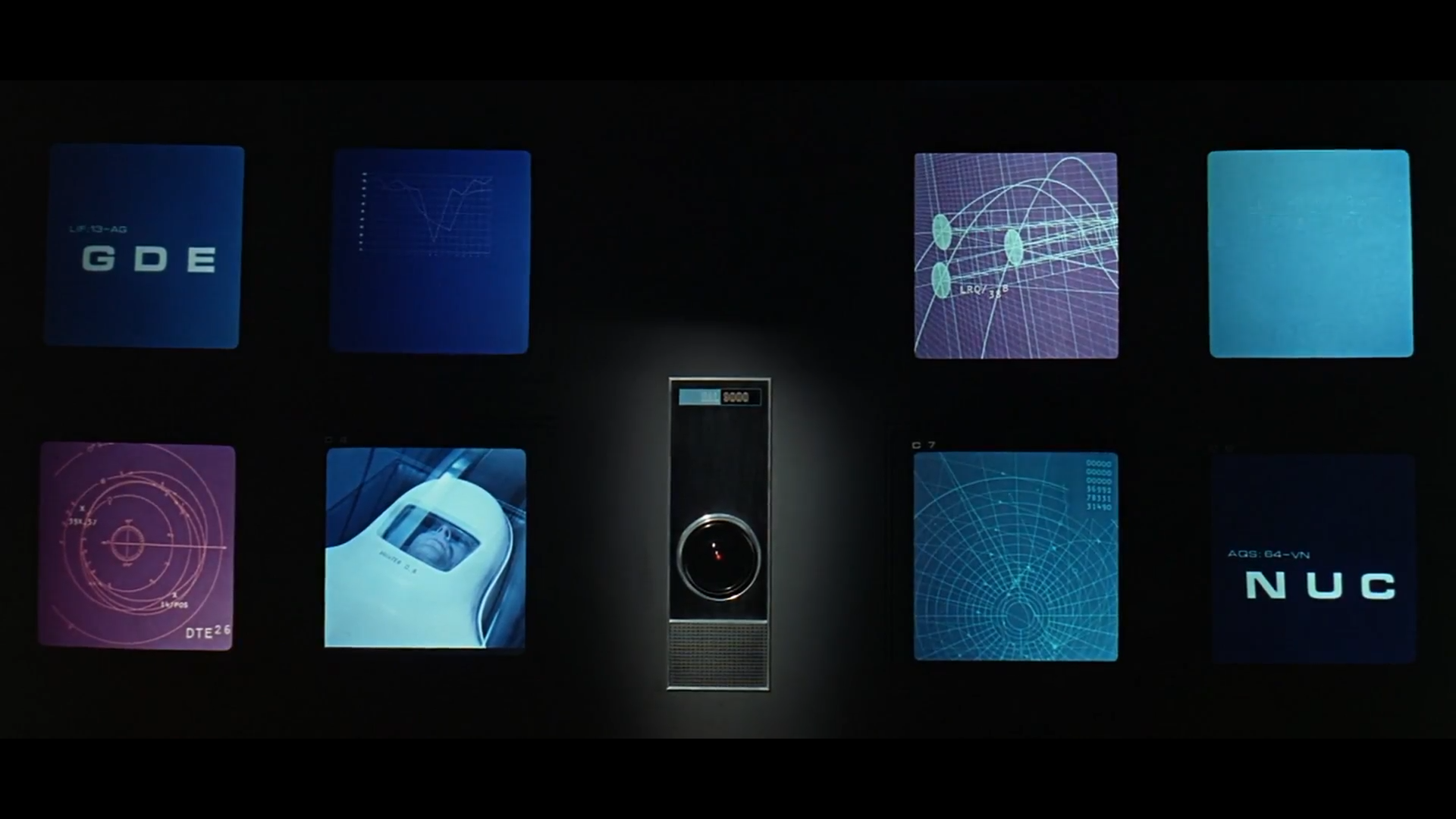

This video by supermarket-brand Thor should give you a much more grounded perspective. No, the chances of any of the LLMs turning into Skynet are astronomically low, probably zero. The AIs are not gonna lead us into a robot apocalypse. So, what are the REAL dangers here?

- As is already happening, greedy CEOs are salivating at the idea of replacing human workers with chatbots. For many this might actually stick, and leave them unemployable.

- LLMs by their very nature will “hallucinate” when asked for stuff, because they only have a model of language, not of the world. As a result, as an expert said, “What the large language models are good at is saying what an answer should sound like, which is different from what an answer should be”. And this is a fundamental limitation of all these models; it’s not something that can be patched out of them. So, bots will spew extremely convincing bullshit and cause lots of damage as a result.

- NVidia recently reported the earnings they’ve gotten thanks to all this AI training (as it’s heavily dependent on strong GPUs), and its market value hit a trillion dollars or something like that. This has created a huge gold rush. In the USA in particular, it’s anticipated that this will kill any sort of regulation that might slow down the money. The EU might not go that route, and Japan recently went all in on AI, declaring that training AIs doesn’t break copyright. So, we’re gonna see an arms race that will move billions of coin.

- Both the art and text AIs will get to the point where they can replace low-level people. They’re not any danger to proper experts and artists, but students and learners will be affected. This will kill entry-level jobs. How will the upcoming generations get the experience to actually become trained? “Not my problem”, the AI companies and their customers will say. I hope this ends up being the catalyst for a serious move towards UBI, but who knows.

So no, we’re not gonna see Endos crushing skulls, but if measures aren’t taken we’re gonna see inequality get way, WAAAY worse very quickly all around the world.

Hi @jherazob@beehaw.org, finally got around to watching the video, thanks for letting me know about it.👍 One thing that really befuddles me about AI is the fact that we don’t know how it gets from point A to point Z as Mr. Dudeguy mentioned in the video. Why on earth would anyone design something that way? And why can’t you just ask it, “ChatGPT, how did you reach that conclusion about X?” (Possibly a very dumb question, but anyway there it is 🤷).

> supermarket-brand Thor

Kyle Hill looks like if Thor and Aquaman had a baby. A very nerdy baby.

A lot of the fearmongering surrounding AI, especially LLMs like GPT, is very poorly directed.

People with a vested interest in LLMs (i.e. shareholders) play into the idea that we’re months away from the AI singularity, because it generates hype for their technology.

In my opinion, a much more real and immediate risk of the widespread use of ChatGPT, for example, is that people believe what it says. ChatGPT and other LLMs are bullshitters - they give you an answer that sounds correct, without ever checking whether it is correct.

The thing I’m more concerned about is “move fast and break things” techbros implementing these technologies in stupid ways without considering: A) whether the tech is mature enough for the uses they’re putting it to, and B) biases inherited from training data and methods.

LLMs inherit biases from their data because their data is shitloads of people talking and writing, and often we don’t even know or understand the biases without close examination. Trying to apply LLMs or other ML models to things like medicine, policing, or housing without being very careful about understanding the potential for incredible biases in things that seem like impartial data is reckless and just asking for negative outcomes for minorities. And the ways that I’m seeing most of these ML companies try to mitigate those biases seem very much like bandaids as they attempt to rush these products out the gate to be the first out the door.

I’m not at all concerned about the singularity, or GI, or any of that crap. But I’m quite concerned about ML models being applied in medicine without understanding the deep racial and gender inequities that are inherent in medical datasets. I’m quite concerned with any kind of application in policing or security, or anything making decisions about finance or housing or really any area with a history of systemic biases that will show up in a million ways in the datasets that these models are being trained on.

Medicine is quite resistant and has a lot of math and stats nerds already. I know this because I do data science in healthcare. We’re not pushing to overuse AI in poor ways. We definitely get stuff wrong, but I wouldn’t look towards it to worsen inequality.

Low-wage jobs that have a high impact on inequality, such as governmental jobs, however, are a huge and problematic target. VCs will spin up companies to automate away the need for tax auditors and welfare auditors, to replace or reduce the number of law clerks, to proactively identify people to face extra scrutiny by police and child services, etc. This is where we’re gonna create a lot of inequality real fast if we’re not careful. These fields are not led by math and stats nerds, nor do they have any on hand to tell them to slow down. They’re also already at risk and frequently on the chopping block, putting a lot of pressure on them to be ‘efficient’.

Hey @Gaywallet, I was hoping I’d see you chime in given your background. I don’t have any particular expertise when it comes to this subject, so it’s somewhat reassuring to see your confidence that folks in the healthcare industry will be more careful than I assume. I work in an adjacent field and know there are a lot of folks doing really good work with ML in healthcare, and that most of those people are very cognizant of the risks. I still worry that there are a lot of spaces in healthcare and especially in areas like claims payment/processing where that care is not going to be taken and folks are going to be harmed.

Ahhh yeah claims and payment processing is typically done by the government or by insurance companies and is definitely a valid risk. They already do as much as they can to automate - they’re mostly concerned with whether they’re making profit or keeping costs down. That is absolutely a sector to be worried about.

Yeah, I work for a company that builds and runs all kinds of healthcare-related systems for state and local governments. I work on a Title XIX (Medicaid) account and while we are always looking for ways to increase access, budgets are very tight. One of my concerns is that payors in this space will look to AI as a way to cut costs, without enough understanding or care for the potential risks, and the lowest-bidder model that most states are forced into will mean that the vendors that are building and running these systems won’t put in the time or expertise needed to really make sure those risks are accounted for.

When it comes to private insurance, I don’t expect anything from them but absolute commitment to profit over any other concern, and I’m deeply concerned about the ways that they may use AI to try and automate to the detriment of patients, but especially minorities. I absolutely don’t expect somebody like UHC to take the kind of care needed to mitigate those biases when applying AI to their processes and systems.

If it’s any consolation, most of these places have systems in place which already automate out most of the work - that article that broke recently about physicians looking at appeals for an average of under one second is an example of how they’re currently doing it. There are some protections in place, but I am also very pessimistic about this sector and, as you mentioned, about all sectors which operate under a lowest-bidder model.